What does GAN do?

The main focus of GANs (Generative Adversarial Networks) is to generate data from scratch, mostly images, though other domains such as music have also been explored. But the scope of application is far bigger than this. As in the example below, a GAN can generate a zebra from a horse. In reinforcement learning, it can help a robot learn much faster.

Generator and discriminator

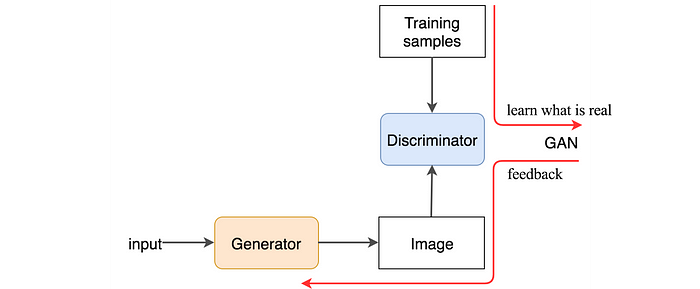

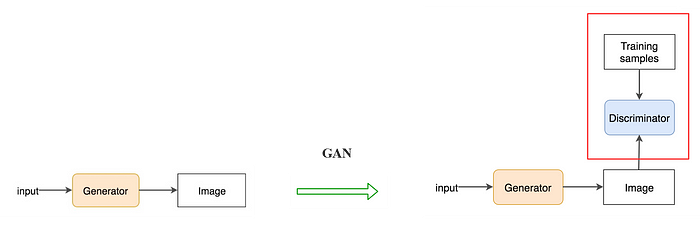

A GAN is composed of two deep networks: the generator and the discriminator. We will first look at how a generator creates images before learning how to train it.

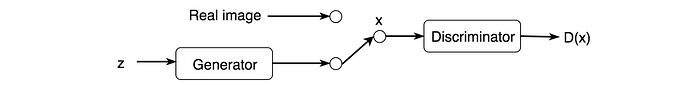

First, we sample some noise z using a normal or uniform distribution. With z as input, we use a generator G to create an image x (x = G(z)). Yes, it sounds magical, and we will explain it one step at a time.
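To make x = G(z) concrete, here is a minimal sketch in plain Python. The names `sample_noise` and `toy_generator`, the single tanh layer, and the tiny sizes are all assumptions made for illustration; a real generator is a deep network with trained weights.

```python
import math
import random

def sample_noise(dim, rng):
    # z ~ N(0, I): each latent entry is an independent standard normal draw.
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def toy_generator(z, weights, biases):
    # Hypothetical stand-in for G: one linear layer followed by tanh,
    # so every output "pixel" lands in [-1, 1].
    return [math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
            for row, b in zip(weights, biases)]

rng = random.Random(0)
latent_dim, num_pixels = 4, 6

# Untrained, randomly initialised parameters (illustrative only).
weights = [[rng.uniform(-1.0, 1.0) for _ in range(latent_dim)]
           for _ in range(num_pixels)]
biases = [0.0] * num_pixels

z = sample_noise(latent_dim, rng)
x = toy_generator(z, weights, biases)  # x = G(z)
print(len(z), len(x))
```

Because the weights are untrained, the output here is just structured noise, which is exactly why the generator needs the training signal described later.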

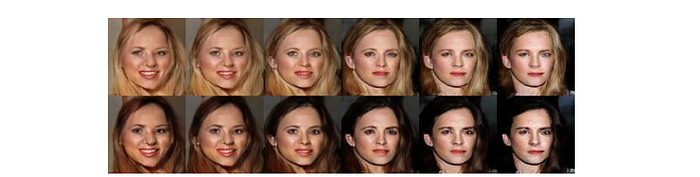

Conceptually, z represents the latent features of the generated images, for example the color and the shape. In deep learning classification, we don’t control the features the model learns. Similarly, in a GAN, we don’t control the semantic meaning of z. We let the training process learn it, i.e. we do not control which dimension of z determines the color of the hair. The most effective way to discover its meaning is to plot the generated images and examine them ourselves. The following images are generated by progressive GAN using random noise z!

We can change one particular dimension in z gradually and visualize its semantic meaning.

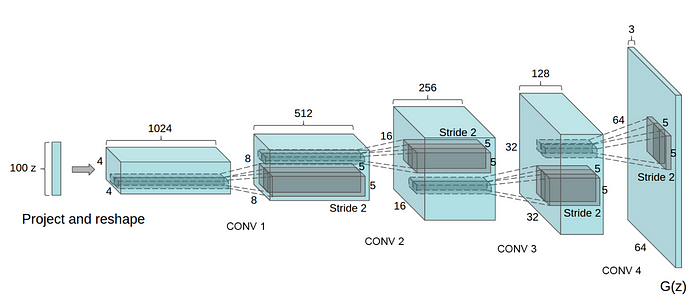
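The idea of sweeping one dimension of z can be sketched in a few lines of Python. The generator here is a hypothetical two-output function with hand-picked weights, and `traverse` is an illustrative helper name; with a trained GAN we would render each output as an image instead.

```python
import math

def toy_generator(z):
    # Hypothetical fixed G with hand-picked weights (not a trained model).
    weights = [[0.9, -0.2, 0.1],
               [0.0, 0.5, -0.7]]
    return [math.tanh(sum(w * zi for w, zi in zip(row, z))) for row in weights]

def traverse(z, dim, values):
    # Generate one output per value, changing only z[dim] and
    # leaving every other latent entry (and z itself) untouched.
    outputs = []
    for v in values:
        z_mod = list(z)
        z_mod[dim] = v
        outputs.append(toy_generator(z_mod))
    return outputs

z = [0.3, -1.2, 0.8]
steps = [-2.0, -1.0, 0.0, 1.0, 2.0]
images = traverse(z, dim=0, values=steps)
for v, img in zip(steps, images):
    print(v, img)
```

In this toy setup only the first output responds to dimension 0, because the second output has a zero weight on it. In a real GAN, inspecting which visual attribute changes along the sweep is how we guess what that dimension encodes.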

So what is this magic generator G? The following is DCGAN, one of the most popular designs for the generator network. It performs multiple transposed convolutions to upsample z into the image x. We can view it as a deep learning classifier running in the reverse direction.

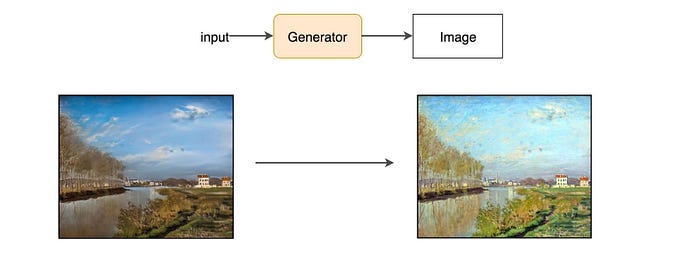
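To see how a transposed convolution upsamples, here is a one-dimensional sketch in plain Python. It assumes no padding and a hypothetical helper name `conv_transpose_1d`; DCGAN itself uses 2D transposed convolutions with learned kernels, batch normalization, and many channels.

```python
def conv_transpose_1d(x, kernel, stride):
    # With no padding, the output length is (len(x) - 1) * stride + len(kernel).
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for k, wk in enumerate(kernel):
            # Each input value "stamps" a scaled copy of the kernel
            # into the output, shifted by i * stride.
            out[i * stride + k] += xi * wk
    return out

x = [1.0, 2.0, 3.0]
kernel = [1.0, 0.5]
y = conv_transpose_1d(x, kernel, stride=2)
print(y)  # [1.0, 0.5, 2.0, 1.0, 3.0, 1.5]
```

Three input values become six output values. Stacking several such layers is how a short noise vector grows into a full-size image.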

But a generator alone will just create random noise. Conceptually, the discriminator in a GAN guides the generator on what images to create. Let’s consider one GAN application, CycleGAN, which uses a generator to convert real scenery into a Monet-style painting.

By training with real images and generated images, GAN builds a discriminator to learn what features make images real. Then the same discriminator will provide feedback to the generator to create paintings that look like the real Monet paintings.

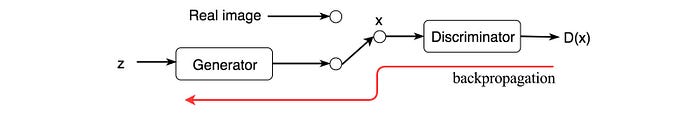

So how is it done technically? The discriminator looks at real images (training samples) and generated images separately. It distinguishes whether its input image is real or generated. The output D(x) is the probability that the input x is real, i.e. P(class of input = real image).

We train the discriminator just like a deep network classifier. If the input is real, we want D(x) = 1. If it is generated, we want D(x) = 0. Through this process, the discriminator identifies features that contribute to real images.

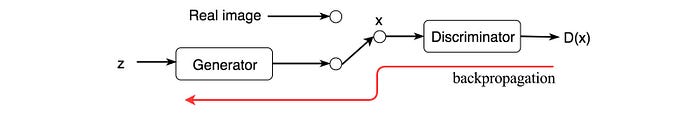
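The real = 1, generated = 0 targets described above translate into an ordinary binary cross-entropy loss. The sketch below assumes we already have discriminator outputs as plain probabilities; `bce` and `discriminator_loss` are illustrative names, and the small `eps` is only there to avoid log(0).

```python
import math

def bce(d_out, label):
    # Binary cross-entropy for one sample.
    # label is 1 for a real image and 0 for a generated one.
    eps = 1e-12
    return -(label * math.log(d_out + eps)
             + (1 - label) * math.log(1.0 - d_out + eps))

def discriminator_loss(d_real, d_fake):
    # Average loss on real samples (target 1) plus generated samples (target 0).
    real_term = sum(bce(d, 1) for d in d_real) / len(d_real)
    fake_term = sum(bce(d, 0) for d in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that separates the two sets well has a low loss ...
confident = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
# ... while one that outputs 0.5 everywhere sits at 2 * log(2).
confused = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(confident, confused)
```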

On the other hand, we want the generator to create images for which D(x) = 1 (matching the real images). So we can train the generator by backpropagating this target value all the way back to the generator, i.e. we train the generator to create images that move toward what the discriminator thinks is real.

We train both networks in alternating steps and lock them into a fierce competition to improve themselves. Eventually, the discriminator picks up on tiny differences between real and generated images, and the generator creates images that the discriminator cannot tell apart from real ones. Ideally, the GAN model converges and produces natural-looking images.

This discriminator concept can also be applied to many existing deep learning applications. The discriminator in a GAN acts as a critic. We can plug the discriminator into existing deep learning solutions to provide feedback that makes them better.

Backpropagation

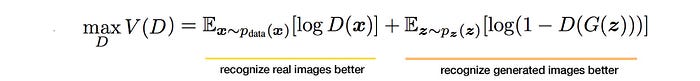

Now, we will go through some simple equations. The discriminator outputs a value D(x) indicating the probability that x is a real image. Our objective is to maximize the chance of recognizing real images as real and generated images as fake, i.e. the maximum likelihood of the observed data. To measure the loss, we use cross-entropy as in most deep learning: -p log(q). For real images, p (the true label) equals 1. For generated images, we reverse the label (i.e. one minus the label). Minimizing this loss is the same as maximizing the log-likelihood, so the objective becomes:

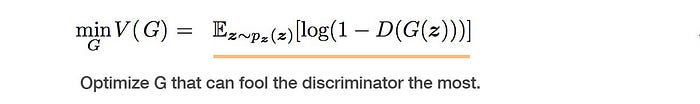

On the generator side, its objective function wants the model to generate images with the highest possible value of D(x) to fool the discriminator.

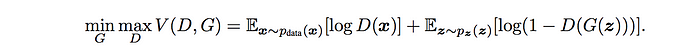

We often define a GAN as a minimax game in which G wants to minimize V while D wants to maximize it.

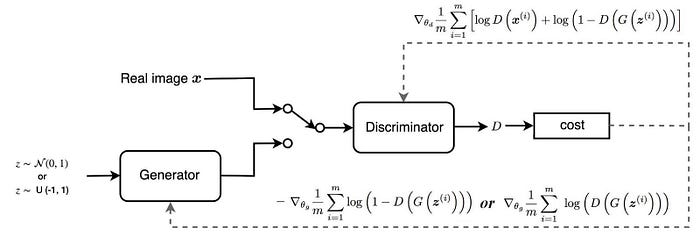
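For readers without the figures, the objectives above are usually written as follows in the GAN literature. The symbols p_data (the real data distribution) and p_z (the noise distribution) are notation assumed here, since the original formulas appear only as images.

```latex
% Discriminator: maximize the log-likelihood of labeling real and generated images correctly
\max_D \; V(D) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
               + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Generator: make D(G(z)) as large as possible
\min_G \; V(G) = \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Combined minimax game
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
                         + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```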

Once both objective functions are defined, they are learned jointly by alternating gradient descent. We fix the generator’s parameters and perform a single iteration of gradient descent on the discriminator using the real and the generated images. Then we switch sides: we fix the discriminator and train the generator for a single iteration. We train both networks in alternating steps until the generator produces good quality images. The following summarizes the data flow and the gradients used for the backpropagation.

The pseudo-code below puts everything together and shows how GAN is trained.
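As a runnable illustration of the alternating updates described above, here is a deliberately tiny GAN in plain Python. Everything about the setup is an assumption chosen to keep the gradients hand-derivable: the "images" are single numbers drawn from N(4, 0.5), the generator is G(z) = a·z + b, the discriminator is D(x) = sigmoid(w·x + c), and `train_toy_gan` is a hypothetical function name. Real GANs use deep networks and an autodiff framework.

```python
import math
import random

def sigmoid(a):
    # Numerically stable logistic function.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def train_toy_gan(steps, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c)
    a, b = 1.0, 0.0   # generator     G(z) = a * z + b
    for _ in range(steps):
        # Discriminator step: generator parameters (a, b) are held fixed.
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for xr, xf in zip(real, fake):
            dr, df = sigmoid(w * xr + c), sigmoid(w * xf + c)
            # Gradient of log D(xr) + log(1 - D(xf)) with respect to (w, c).
            gw += (1.0 - dr) * xr - df * xf
            gc += (1.0 - dr) - df
        w += lr * gw / batch   # gradient ascent: D maximizes V
        c += lr * gc / batch

        # Generator step: discriminator parameters (w, c) are held fixed.
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            df = sigmoid(w * (a * z + b) + c)
            # Gradient of log(1 - D(G(z))) with respect to (a, b).
            ga += -df * w * z
            gb += -df * w
        a -= lr * ga / batch   # gradient descent: G minimizes V
        b -= lr * gb / batch
    return (w, c), (a, b)

(w, c), (a, b) = train_toy_gan(steps=500)
print("discriminator:", w, c)
print("generator:", a, b)
```

Even after the first iteration, the discriminator's weight w turns positive (real samples are larger than generated ones), and the generator's offset b is pushed upward toward the real data. How far it gets depends on the learning rate and step count, and GAN training in general is not guaranteed to settle.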

Generator diminished gradient

However, the generator runs into a diminishing gradient problem. The discriminator usually wins early against the generator, because it is easier to distinguish generated images from real ones early in training. That drives D(G(z)) toward 0, so the generator's term log(1 - D(G(z))) → 0 and flattens out. The gradient for the generator then vanishes, which makes the gradient descent optimization very slow. To improve on that, GAN provides an alternative function to backpropagate the gradient to the generator.
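The effect is easy to check numerically. The sketch below assumes the alternative meant here is the non-saturating objective from the original GAN paper, where the generator maximizes log D(G(z)) instead of minimizing log(1 - D(G(z))). It compares the gradient of each loss with respect to the discriminator's pre-sigmoid output (the logit); the function names are illustrative.

```python
import math

def sigmoid(a):
    # Numerically stable logistic function.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def grad_saturating(logit):
    # d/d(logit) of log(1 - sigmoid(logit)) = -sigmoid(logit)
    return -sigmoid(logit)

def grad_non_saturating(logit):
    # d/d(logit) of -log(sigmoid(logit)) = -(1 - sigmoid(logit))
    return -(1.0 - sigmoid(logit))

# A very negative logit means the discriminator is confident the image is fake.
for logit in (-8.0, -4.0, 0.0):
    print(logit, sigmoid(logit), grad_saturating(logit), grad_non_saturating(logit))
```

When the discriminator confidently rejects a generated image (logit of -8, so D(G(z)) is about 0.0003), the original loss passes almost no gradient back, while the alternative still passes a gradient close to -1. Both agree at D(G(z)) = 0.5.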

More thoughts

In general, the concept of generating data offers great potential but, unfortunately, also great dangers. There are many other generative models besides GANs. For example, OpenAI’s GPT-2 generates paragraphs that may look like what a journalist writes. Indeed, OpenAI decided not to release its dataset and full trained model at launch because of possible misuse.

Yes, that is the basic concept that has led to a few thousand research papers. As always, simplicity works. Here, we have covered the concept, but the potential is far bigger than what we described. To see why GANs have become so popular, let’s look at some of the potential applications:

All the above applications are explained in depth here.

Source: Medium.com
