Standardization

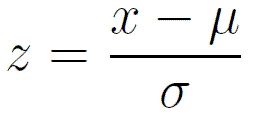

Standardization (or Z-score normalization) rescales the features so that they have the properties of a standard normal distribution, with μ = 0 and σ = 1, where μ is the mean and σ is the standard deviation. It changes only the location and scale of each feature, not the shape of its distribution. The standard scores (also called z-scores) of the samples are calculated as z = (x − μ) / σ.
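As a rough illustration of the z-score calculation, here is a minimal NumPy sketch. The function name `standardize` and the toy height/weight data are made up for this example, and it assumes no feature is constant (a constant column would have σ = 0 and cause a division by zero).

```python
import numpy as np

def standardize(X):
    """Return column-wise z-scores: (x - mean) / standard deviation."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)      # per-feature mean
    sigma = X.std(axis=0)    # per-feature population standard deviation (ddof=0)
    return (X - mu) / sigma

# Hypothetical toy data: [height in cm, weight in kg]
X = np.array([[150.0, 50.0],
              [160.0, 60.0],
              [170.0, 70.0],
              [180.0, 80.0]])

Z = standardize(X)
print(Z.mean(axis=0))  # each feature's mean is now (numerically) 0
print(Z.std(axis=0))   # each feature's standard deviation is now 1
```

In practice you would compute μ and σ on the training data only and reuse them for any new data, so that both are scaled the same way.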

Standardizing the features is commonly important for algorithms such as:

- K-nearest neighbors with a Euclidean distance measure, if you want all features to contribute equally.

- Logistic regression, SVM, perceptrons, neural networks.

- K-means.

- Linear discriminant analysis, principal component analysis, kernel principal component analysis.
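To see why distance-based methods such as k-nearest neighbors care about scale, the sketch below compares Euclidean distances before and after standardization. The income/age samples and the helper `euclidean` are invented for illustration only.

```python
import numpy as np

# Hypothetical samples: [annual income in dollars, age in years]
X = np.array([[30000.0, 25.0],
              [30500.0, 60.0],
              [60000.0, 26.0],
              [90000.0, 40.0]])

def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.sqrt(np.sum((a - b) ** 2)))

# Raw features: the income column has a far larger range,
# so it dominates the distance and age barely counts.
raw_01 = euclidean(X[0], X[1])  # similar income, 35 years apart
raw_02 = euclidean(X[0], X[2])  # similar age, 30000 dollars apart

# Standardized features: both columns have mean 0 and standard deviation 1.
Z = (X - X.mean(axis=0)) / X.std(axis=0)
std_01 = euclidean(Z[0], Z[1])
std_02 = euclidean(Z[0], Z[2])

print(raw_01 < raw_02)  # raw: sample 1 looks like the nearer neighbor of sample 0
print(std_02 < std_01)  # standardized: sample 2 is now the nearer neighbor
```

On the raw data the 35-year age gap is almost invisible next to the income values, while after standardization both features contribute on a comparable scale and the nearest neighbor changes.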

Conclusion:

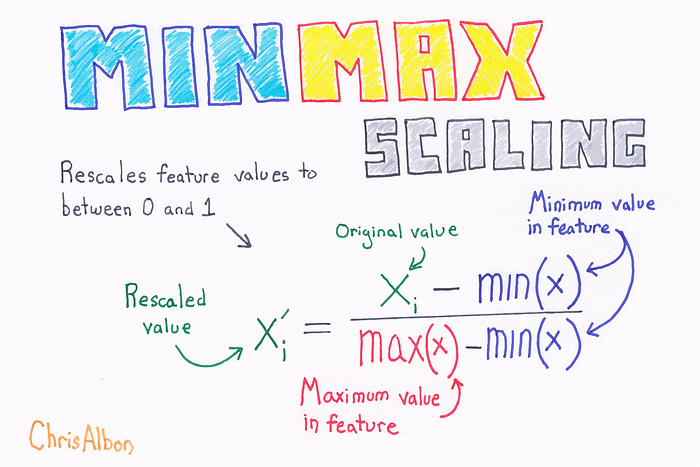

Standardization and normalization serve a similar goal: giving features comparable ranges. Both are widely used in data analysis to help extract insight from raw data. In statistics, standardization subtracts the mean from each value and then divides by the standard deviation. In linear algebra, normalization divides a vector by its length, producing a vector of unit length; in feature scaling, the term more often refers to min-max scaling, which maps the data into the range 0 to 1.
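The rescalings mentioned in this conclusion can be put side by side in a short sketch. The values in `x` are arbitrary, and "min-max scaling" is the name assumed here for the transform that maps data into the range 0 to 1; dividing by the vector length is shown separately, since it yields unit length rather than a fixed range.

```python
import numpy as np

x = np.array([2.0, 4.0, 6.0, 8.0])  # arbitrary example values

# Standardization: subtract the mean, divide by the standard deviation.
standardized = (x - x.mean()) / x.std()

# Min-max scaling: map the smallest value to 0 and the largest to 1.
min_max = (x - x.min()) / (x.max() - x.min())

# Vector normalization: divide by the Euclidean length (L2 norm).
unit_norm = x / np.linalg.norm(x)

print(standardized.mean(), standardized.std())  # mean 0, standard deviation 1
print(min_max.min(), min_max.max())             # range runs from 0 to 1
print(np.linalg.norm(unit_norm))                # length is 1
```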
