Gradient boosted trees have become the go-to algorithms when it comes to training on tabular data. Over the past couple of years, we’ve been fortunate to have not only one implementation of boosted trees, but several boosting algorithms– each with their own unique characteristics.

In this blog, we will try to determine whether there is one true killer algorithm that beats them all. We’ll compare XGBoost, LightGBM and CatBoost to the older GBM, measuring accuracy and speed on four fraud related datasets. We’ll also present a concise comparison among all new algorithms, allowing you to quickly understand the main differences between each. A prior understanding of gradient boosted trees is useful.

What’s their special thing?

The algorithms differ from one another in the implementation of the boosted trees algorithm and their technical compatibilities and limitations. XGBoost was the first to try improving GBM’s training time, followed by LightGBM and CatBoost, each with their own techniques, mostly related to the splitting mechanism. The packages of all algorithms are constantly being updated with more features and capabilities.

A few important things to know before we dive into details:

Community strength — From our experience, XGBoost is the easiest to work with when you need to solve problems you encounter, because it has a strong community and many relevant community posts. LightGBM has a smaller community which makes it a bit harder to work with or use advanced features. CatBoost also has a small community, but they have a great Telegram channel where questions are answered by the package developers in a short time.

Analysis ease — When training a model, even if you work closely with the documentation, you want to make sure the training was performed exactly like you meant it to. For example, you want to make sure the right features were treated as categorical and that the trees were built correctly. With this criteria, I find CatBoost and LightGBM to be more of a blackbox, because it is not possible in R to plot the trees and to get all of the model’s information (it is not possible to get the model’s categorical features list, for example). XGBoost is more transparent, allowing easy plotting of trees and since it has no built-in categorical features encoding, there are no possible surprises related to features type.

Model serving limitations — When choosing your production algorithm, you can’t just conduct experiments in your favorite environment (R/Python) and choose the one with the best performance, because unfortunately, all algorithms have their limitations. For example, if you use a Predictive Model Markup Language (PMML) for scoring in production, it is available for all algorithms. However, in CatBoost you are only allowed to use one-hot encoding for that. The reason is that default CatBoost representation for categorical features is not supported by PMML. Hence, I recommend taking these considerations into account before starting to experiment with your own data.

Now let’s go over their main characteristics:

Splits

Before learning, all algorithms create feature-split pairs for all features. Example for such pairs are: (age, <5), (age, >10), (amount, >500). These feature-split pairs are histogram-based built, and are used during learning as possible node splits. This preprocessing method is faster than the exact greedy algorithm, which linearly enumerates all the possible splits for continuous features.

lightGBM offers gradient-based one-side sampling (GOSS) that selects the split using all the instances with large gradients (i.e., large error) and a random sample of instances with small gradients. In order to keep the same data distribution when computing the information gain, GOSS introduces a constant multiplier for the data instances with small gradients. Thus, GOSS achieves a good balance between increasing speed by reducing the number of data instances and keeping the accuracy for learned decision trees. This method is not the default method for LightGBM, so it should be selected explicitly.

Catboost offers a new technique called Minimal Variance Sampling (MVS), which is a weighted sampling version of Stochastic Gradient Boosting. In this technique, the weighted sampling happens in the tree-level and not in the split-level. The observations for each boosting tree are sampled in a way that maximizes the accuracy of split scoring.

XGboost is not using any weighted sampling techniques, which makes its splitting process slower compared to GOSS and MVS.

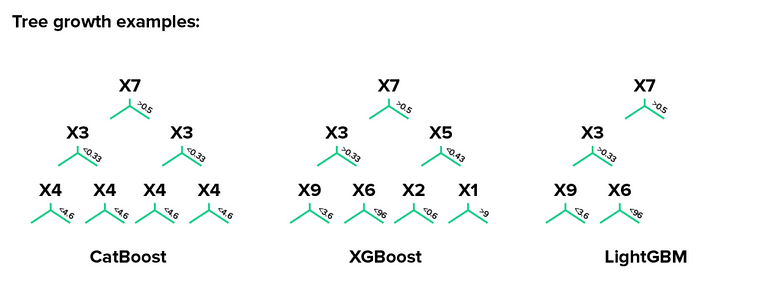

Leaf growth

Catboost grows a balanced tree. In each level of such a tree, the feature-split pair that brings to the lowest loss (according to a penalty function) is selected and is used for all the level’s nodes. It is possible to change its policy using the grow-policy parameter.

LightGBM uses leaf-wise (best-first) tree growth. It chooses to grow the leaf that minimizes the loss, allowing a growth of an imbalanced tree. Because it doesn’t grow level-wise, but leaf-wise, overfitting can happen when data is small. In these cases, it is important to control the tree depth.

XGboost splits up to the specified max_depth hyperparameter and then starts pruning the tree backwards and removes splits beyond which there is no positive gain. It uses this approach since sometimes a split of no loss reduction may be followed by a split with loss reduction. XGBoost can also perform leaf-wise tree growth (as LightGBM).

Missing values handling

Catboost has two modes for processing missing values, “Min” and “Max”. In “Min”, missing values are processed as the minimum value for a feature (they are given a value that is less than all existing values). This way, it is guaranteed that a split that separates missing values from all other values is considered when selecting splits. “Max” works exactly the same as “Min”, only with maximum values.

In LightGBM and XGBoost missing values will be allocated to the side that reduces the loss in each split.

Feature importance methods

Catboost has two methods: The first is “PredictionValuesChange”. For each feature, PredictionValuesChange shows how much, on average, the prediction changes if the feature value changes. A feature would have a greater importance when a change in the feature value causes a big change in the predicted value. This is the default feature importance calculation method for non-ranking metrics. The second method is “LossFunctionChange”. This type of feature importance can be used for any model, but is particularly useful for ranking models. For each feature the value represents the difference between the loss value of the model with this feature and without it. Since it is computationally expensive to retrain the model without one of the features, this model is built approximately using the original model with this feature removed from all the trees in the ensemble. The calculation of this feature importance requires a dataset.

LightGBM and XGBoost have two similar methods: The first is “Gain” which is the improvement in accuracy (or total gain) brought by a feature to the branches it is on. The second method has a different name in each package: “split” (LightGBM) and “Frequency”/”Weight” (XGBoost). This method calculates the relative number of times a particular feature occurs in all splits of the model’s trees. This method can be biased by categorical features with a large number of categories.

XGBoost has one more method, “Coverage”, which is the relative number of observations related to a feature. For each feature, we count the number of observations used to decide the leaf node for.

Categorical features handling

Catboost uses a combination of one-hot encoding and an advanced mean encoding. For features with low number of categories, it uses one-hot encoding. The maximum number of categories for one-hot encoding can be controlled by the one_hot_max_size parameter. For the remaining categorical columns, CatBoost uses an efficient method of encoding, which is similar to mean encoding but with an additional mechanism aimed at reducing overfitting. Using CatBoost’s categorical encoding comes with a downside of a slower model.

LightGBM splits categorical features by partitioning their categories into 2 subsets. The basic idea is to sort the categories according to the training objective at each split. From my experience, this method does not necessarily improve the LightGBM model. It has comparable (and sometimes worse) performance than other methods (for example, target or label encoding).

XGBoost doesn’t have an inbuilt method for categorical features. Encoding (one-hot, target encoding, etc.) should be performed by the user.

All packages are constantly being updated and improved, so I recommend following their releases, which sometimes include amazing new features.

***Thank You***

0 Response to "Boosting Algorithms Comparision."

Post a Comment