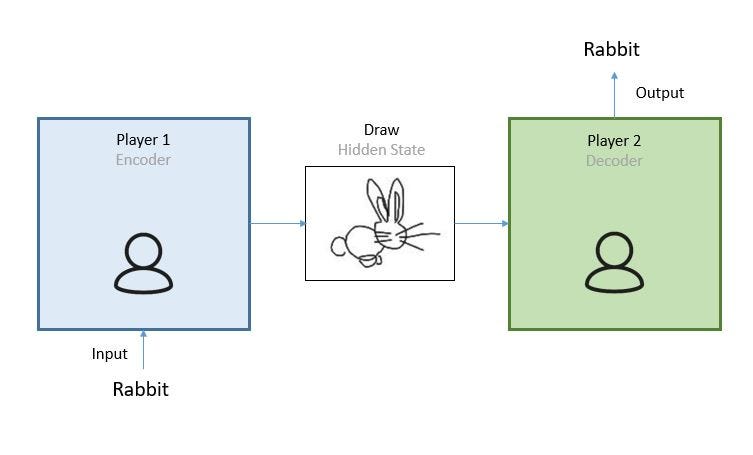

The best way to understand the concept of an encoder-decoder model is by playing Pictionary. The rules of the game are very simple: player 1 randomly picks a word from a list and sketches its meaning in a drawing. The role of the second player on the team is to analyse the drawing and identify the word it describes. In this example we have three important elements: player 1 (the person who converts the word into a drawing), the drawing (a rabbit), and player 2 (the person who guesses the word the drawing represents). This is all we need to understand an encoder-decoder model. Below we will build a comparison between the Pictionary game and an encoder-decoder model for translating Spanish to English.

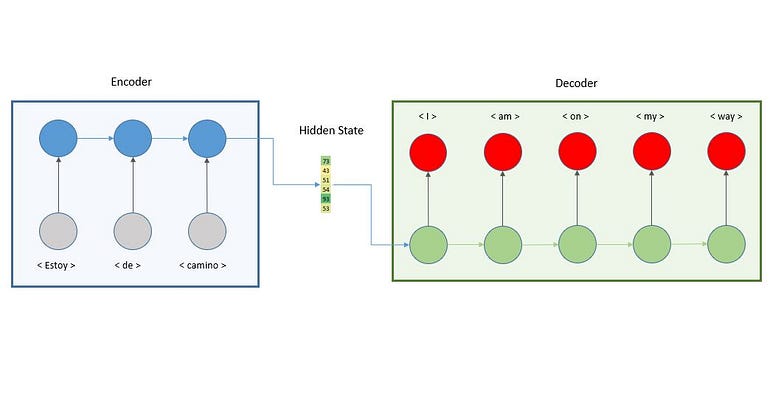

If we translate the above diagram into machine learning concepts, we get the one below. In the following sections we will go through each component.

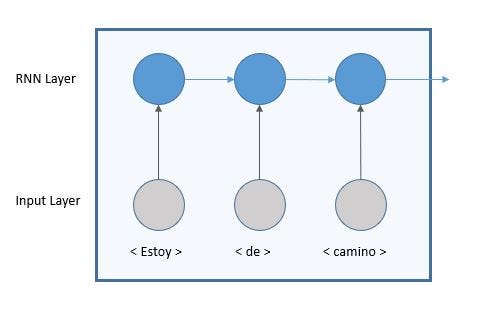

1-Encoder (Picturist)

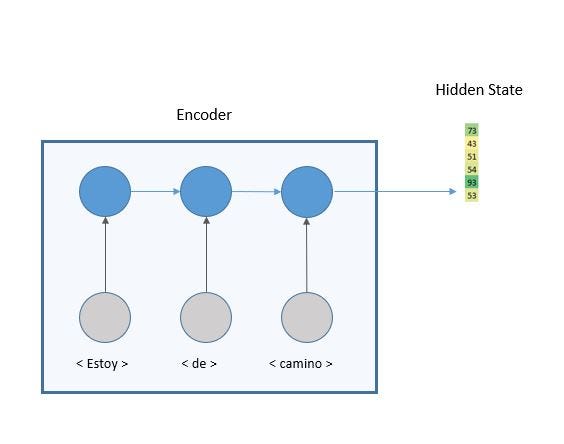

Encoding means converting data into a required format. In the Pictionary example we convert a word (text) into a drawing (image). In the machine learning context, we convert a sequence of words in Spanish into a fixed-length vector, also known as the hidden state. The encoder is built by stacking recurrent neural network (RNN) layers. We use this type of layer because its structure allows the model to capture the context and temporal dependencies of the sequence. The output of the encoder, the hidden state, is the state of the RNN at the last timestep.
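To make the encoder less abstract, here is a minimal sketch in plain Python. It is only an illustration under several assumptions: the article does not say which RNN cell is used, so the sketch uses the simplest one (a single tanh layer), the weights are random rather than trained, and the toy vocabulary and every name in it (`encode`, `rnn_step`, `embeddings`, `units`) are made up for this example.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def rnn_step(x, h, W_x, W_h, b):
    """One timestep of a simple RNN: h_new = tanh(W_x x + W_h h + b)."""
    h_new = []
    for i in range(len(h)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        total += sum(W_h[i][j] * h[j] for j in range(len(h)))
        h_new.append(math.tanh(total))
    return h_new

def encode(token_ids, embeddings, W_x, W_h, b):
    """Read the sequence word by word and return the last hidden state."""
    h = [0.0] * len(b)  # the state starts empty
    for token_id in token_ids:
        h = rnn_step(embeddings[token_id], h, W_x, W_h, b)
    return h

rng = random.Random(0)
vocab = {"el": 0, "conejo": 1, "come": 2, "zanahorias": 3}  # toy Spanish vocabulary
embed_dim, units = 3, 4  # hypothetical sizes, chosen only for the example
embeddings = make_matrix(len(vocab), embed_dim, rng)
W_x = make_matrix(units, embed_dim, rng)
W_h = make_matrix(units, units, rng)
b = [0.0] * units

short = encode([vocab[w] for w in "el conejo".split()], embeddings, W_x, W_h, b)
long = encode([vocab[w] for w in "el conejo come zanahorias".split()], embeddings, W_x, W_h, b)
print(len(short), len(long))  # both equal `units`
```

Whether the sentence has two words or four, the encoder returns a vector with `units` entries. Only the values inside the vector change with the input.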

2-Hidden State (Sketch)

The hidden state is the output of the encoder: a fixed-length vector that encapsulates the whole meaning of the input sequence. The length of the vector depends on the number of cells (units) in the RNN.

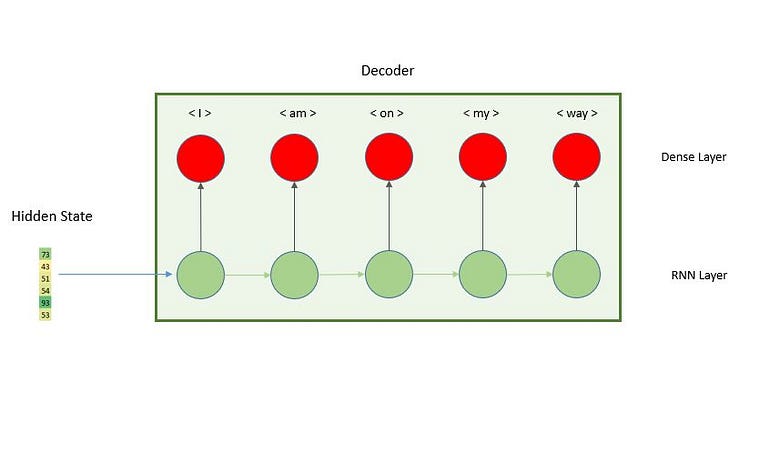

3-Decoder

To decode means to convert a coded message into intelligible language. The second person on the team playing Pictionary converts the drawing into a word. In the machine learning model, the role of the decoder is to convert the hidden state vector into the output sequence, the English sentence. It is also built with RNN layers, followed by a dense layer that predicts each English word.
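The decoding loop can be sketched in the same spirit. This is a simplified illustration, not a definitive implementation: it assumes greedy decoding (always picking the most probable word), a single tanh RNN layer, random untrained weights, and a hard-coded `hidden_state` standing in for the encoder output. The vocabulary, the `<start>` and `<end>` markers, and all function names are hypothetical.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(M, v):
    """Multiply matrix M by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def decode(hidden, embeddings, W_x, W_h, W_out, start_id, end_id, max_len=10):
    """Greedy decoding: feed back the most probable word until <end> or max_len."""
    h, token, output = list(hidden), start_id, []
    for _ in range(max_len):
        x = embeddings[token]
        # RNN step: new state from the previous word and the previous state
        h = [math.tanh(a + c) for a, c in zip(matvec(W_x, x), matvec(W_h, h))]
        # Dense layer + softmax: one probability per word in the vocabulary
        probs = softmax(matvec(W_out, h))
        token = max(range(len(probs)), key=probs.__getitem__)
        if token == end_id:
            break
        output.append(token)
    return output

rng = random.Random(0)
vocab = ["<start>", "<end>", "the", "rabbit", "eats", "carrots"]  # toy English vocabulary
embed_dim, units = 3, 4  # hypothetical sizes, chosen only for the example
embeddings = make_matrix(len(vocab), embed_dim, rng)
W_x = make_matrix(units, embed_dim, rng)
W_h = make_matrix(units, units, rng)
W_out = make_matrix(len(vocab), units, rng)

hidden_state = [0.1, -0.3, 0.5, 0.2]  # stand-in for the encoder output
ids = decode(hidden_state, embeddings, W_x, W_h, W_out, start_id=0, end_id=1)
print([vocab[i] for i in ids])
```

Because the weights are random, the printed words are meaningless. The point is the shape of the loop: the decoder starts from the hidden state, predicts one word per step, and stops on its own, so the output length is not tied to the input length.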

Conclusion

One of the major advantages of this model is that the length of the input and output sequences may differ. This opens the door for very interesting applications such as video captioning or question answering.

The major limitation of this simple encoder-decoder model is that all the information needs to be summarized in a single fixed-length vector, which can be extremely difficult to achieve for long input sequences. Having said that, understanding encoder-decoder models is key to following the latest advances in NLP because they are the seed for attention models and transformers.
